This topic has 38 replies, 9 voices, and was last updated 1 year, 6 months ago by schurmb.


April 16, 2021 at 4:54 pm #105120

Correct, I am speaking about Citrix.

For our externally facing thin clients logging in to Citrix using Azure MFA SAML, there appears to be a 15-minute timeout. I’m trying to figure out whether that is controlled on the Azure Federated Services side or on the Citrix side; I don’t think it’s the thin client side. The reason is that in the Citrix Broker Settings the timeout listed is 300 seconds, which is 5 minutes, and my timeout is taking 15 minutes.

[2021-04-16 07:36:34.874] N/WebUIProc/WebUIModule (1/1) [Citrix] login to broker “https://xxxxxxx.xxxxxxxxx.com/Citrix/NSWEBFAS”

[2021-04-16 07:36:34.874] N/WebUIProc/WebUIModule (1/1) [StoreFront] roaming “https://xxxxxxxx.xxxxxxxxxx.com/Citrix/NSWEBFAS”…

[2021-04-16 07:36:35.272] N/WebUIProc/WebUIModule (1/1) [StoreFront] gateway authorize “/vpn/index.html”…

[2021-04-16 07:51:36.017] N/WebUIProc/WebUIModule (1/1) [StoreFront] gateway authorize failed “/vpn/index.html”. error => Error: The login was cancelled, because wait user credentials timeout.

[2021-04-16 07:51:36.018] N/WebUIProc/WebUIModule (1/1) [StoreFront] roaming failed “https://xxxxxxxx.xxxxxxxx.com/Citrix/NSWEBFAS”. error => Error: The login was cancelled, because wait user credentials timeout.

[2021-04-16 07:51:36.021] N/WebUIProc/WebUIModule (1/1) [Citrix] login to broker “https://xxxxxxxx.xxxxxxxxxx.com/Citrix/NSWEBFAS” failed. error => Error: The login was cancelled, because wait user credentials timeout.

Are your logs similar regardless of what auth method you’re using?

April 19, 2021 at 9:01 am #105128

[2021-04-16 17:02:41.778] wms: connected to MQTT broker.

[2021-04-16 17:02:43.069] [Citrix] login to broker “server”

[2021-04-16 17:02:43.072] [StoreFront] roaming “***”…

[2021-04-16 17:02:43.973] [StoreFront] user authorize “***”…

[2021-04-16 17:23:42.586] [StoreFront] gateway authorize failed. error => Error: The session id is expired, please retry to log in the broker.

[2021-04-16 17:23:42.592] [StoreFront] roaming failed “***”. error => Error: The session id is expired, please retry to log in the broker.

[2021-04-16 17:23:42.597] [Citrix] login to broker “***” failed. error => Error: The session id is expired, please retry to log in the broker.

My logs look similar, although I am not using Azure but a “local” server.
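To compare the two log excerpts above, it can help to measure how long the client sat between the authorize step and the first failure. The following is a minimal Python sketch, not a ThinOS or Citrix tool: the function names are hypothetical, the message text of each sample line is abbreviated, and only the timestamps are taken from the posts. The 300-second value is the Citrix Broker Settings timeout quoted in the first post.

```python
import re
from datetime import datetime

# Matches the leading "[YYYY-MM-DD HH:MM:SS.mmm]" timestamp of a log line.
TS_RE = re.compile(r"^\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\]")

def parse_ts(line):
    """Return the leading timestamp of a log line, or None if there is none."""
    match = TS_RE.match(line.strip())
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S.%f")

def seconds_until_first_failure(lines):
    """Seconds between the first 'failed' line and the timestamped line before it."""
    previous = None
    for line in lines:
        ts = parse_ts(line)
        if ts is None:
            continue  # skip blank or untimestamped lines
        if "failed" in line and previous is not None:
            return (ts - previous).total_seconds()
        previous = ts
    return None

# Timestamps copied from the two posts; message text abbreviated.
AZURE_SAML_LOG = [
    "[2021-04-16 07:36:35.272] [StoreFront] gateway authorize ...",
    "[2021-04-16 07:51:36.017] [StoreFront] gateway authorize failed ...",
]
LOCAL_SERVER_LOG = [
    "[2021-04-16 17:02:43.973] [StoreFront] user authorize ...",
    "[2021-04-16 17:23:42.586] [StoreFront] gateway authorize failed ...",
]
BROKER_TIMEOUT_SECONDS = 300  # value quoted from the Citrix Broker Settings

for name, log in (("Azure SAML", AZURE_SAML_LOG), ("local server", LOCAL_SERVER_LOG)):
    gap = seconds_until_first_failure(log)
    print(f"{name}: {gap:.3f} s ({gap / 60:.1f} min), "
          f"{gap / BROKER_TIMEOUT_SECONDS:.1f}x the 300 s broker setting")
```

The first excerpt waits almost exactly 15 minutes and the second roughly 21 minutes. Neither matches the 300-second broker setting, and the two error messages differ as well, so the two waits may not be governed by the same timer.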

September 10, 2021 at 10:27 am #106189

Any luck with getting rid of this unwanted behaviour? This is pretty annoying!

“Starting broker sign-on” shows after restart, and the users have to wait until it times out with “Could not add account. Please check you account and try again.” Of course it won’t work, since no credentials have been entered.

Broker-url same as you have. Standard address to Storefront.

If you switch to old fashioned legacy url with pnagent, the behavior stops:

https://myserver.something/Citrix/store/PNAgent/config.xml

TC 5070 -> fw 9.1.3112, Citrix Workspace App 21.6.0.28

I’m very surprised to see that this hasn’t been fixed since this thread was started in April…

September 13, 2021 at 3:07 pm #106201

Same behavior on ThinOS 9.1.3129.

Tested on 5070 and 3040.

March 17, 2022 at 6:37 am #107386

Still no solution for this?

March 18, 2022 at 4:12 am #107395

I still have the same issue with 9.1.6108 and the Citrix package v21.12.0.18.3. I still have not upgraded my production environment from ThinOS 8.6 because of this.

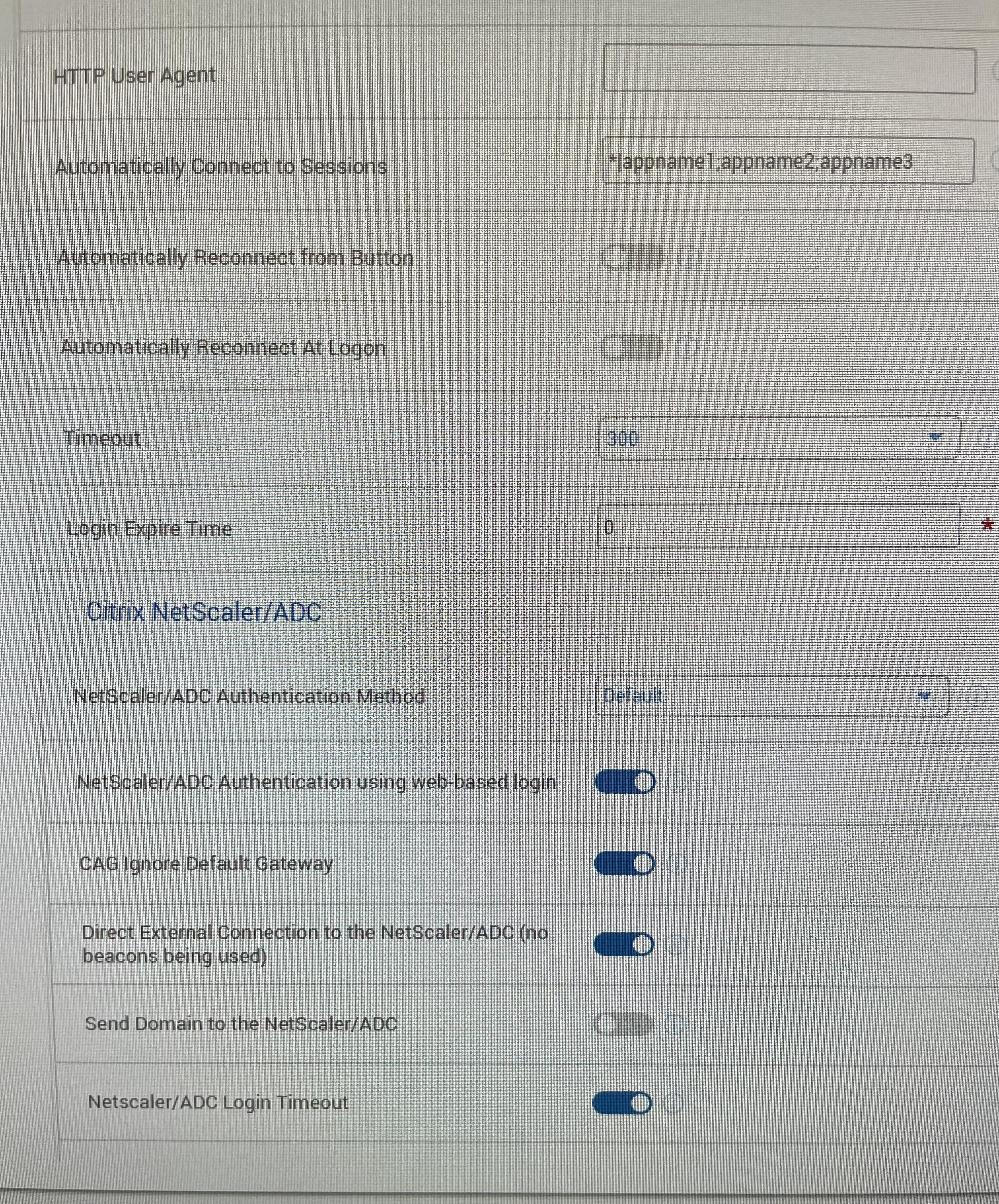

March 23, 2022 at 12:41 pm #107420

Are you set to authenticate using web-based login?

March 23, 2022 at 12:54 pm #107421

Netscaler version 13.0.83.27

March 24, 2022 at 3:04 am #107424

Not using netscaler. Connecting directly to Storefront.

March 24, 2022 at 3:33 am #107426

This same setting works on 5470 mobiles to a single URL, so it works internally to storefront and externally through netscaler. Have you tried the setting to see if it works regardless of your setup?

March 24, 2022 at 3:42 am #107427

Just tried it.

Changed the setting, logged on, logged off, waited 20 minutes and logged on again, same issue.

‘The session ID is expired, please retry to log in the broker.’

March 24, 2022 at 4:42 am #107428

Strange thing is that on our Storefront servers (server group) in our central datacenter, the issue does not occur.

These 2 storefront servers are used for internal and external use.

The issue occurs on our storefront servers in the local datacenters of the mills.

Only one storefront server is deployed here for internal use.

The session settings on the central and local storefront servers are the same.

The central storefront servers are loadbalanced on our internal loadbalancer (not netscaler).

March 24, 2022 at 4:59 am #107430

Anything in the logs from the storefront server? Might be worth a packet capture and analysis to see which side of the conversation is initiating a time out?

I can understand this must be frustrating and I can only provide what works for me in my organization. It’s likely something that differs between our infrastructure configuration.

Just thinking out loud here: it doesn’t happen in your main central data centers but it does in the mills (branch offices, I’m guessing). Are those “branch” locations connected “internally” through a GRE tunnel? Any WAN acceleration between them? That can all add to packet overhead. We’ve had a few remote locations going across a GRE tunnel where we dropped the MTU size in WMS from the default of 1500 to 1340, and that corrected some connectivity issues for those locations.

Again, not the same situation and I don’t know your infrastructure but we found the issue through deep packet inspection and found oversized packets being fragmented and one side of the conversation would then stop. Lowering the MTU from default helped correct it. Also allowing UDP 1494 and 2598 has helped internally as well.
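The MTU reasoning above can be checked with some quick arithmetic. This is a rough Python sketch under stated assumptions: it counts only a basic GRE header (4 bytes) plus an outer IPv4 header (20 bytes), and the function names are hypothetical. Real tunnels may add more overhead (GRE options, IPsec, WAN acceleration), which would be one reason to pick a value as conservative as 1340.

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
GRE_HEADER = 4    # bytes, basic GRE header without key/sequence/checksum fields
TCP_HEADER = 20   # bytes, TCP header without options

def tunnel_payload_mtu(link_mtu, overhead=IPV4_HEADER + GRE_HEADER):
    """Largest inner packet that fits in one outer packet on the link."""
    return link_mtu - overhead

def tcp_mss(mtu):
    """Largest TCP payload per packet for a given MTU (no IP or TCP options)."""
    return mtu - IPV4_HEADER - TCP_HEADER

def needs_fragmentation(packet_size, link_mtu, overhead=IPV4_HEADER + GRE_HEADER):
    """True if the encapsulated packet would exceed the link MTU."""
    return packet_size + overhead > link_mtu

print(tunnel_payload_mtu(1500))         # inner MTU over a plain GRE/IPv4 tunnel
print(needs_fragmentation(1500, 1500))  # a full-size 1500-byte packet
print(needs_fragmentation(1340, 1500))  # a packet at the lowered MTU
print(tcp_mss(1340))                    # TCP payload at the lowered MTU
```

With only the GRE and outer IPv4 headers counted, a client still using the default MTU of 1500 produces packets that no longer fit once encapsulated, while a client set to 1340 stays well under the limit.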

March 24, 2022 at 5:19 am #107432

The branch locations have their own Citrix setup.

So local storefront, controller and Citrix servers. The Site database and WMS are hosted in the central datacenter.

The thin clients in the branches connect to the local storefront server, so only local traffic, no wan connection.

I pointed one of the thin clients in the local branch to the central storefront server and the issue is gone.

We have UDP enabled for the Citrix sessions.

March 24, 2022 at 9:28 am #107434

Sounds to me like something is different in the storefront configurations then. I know you say they’re identical but I’d take another look really closely.
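One way to take that closer look is to dump the settings of the central and local servers into key/value form and compare them mechanically rather than by eye. The Python sketch below only illustrates the comparison step. The setting names and values are made up for the example and are not real StoreFront option names, and how the settings are exported is left open.

```python
def diff_settings(central, local):
    """Return {key: (central_value, local_value)} for every key whose values differ.

    A key missing on one side shows up with None for that side.
    """
    keys = sorted(set(central) | set(local))
    return {
        key: (central.get(key), local.get(key))
        for key in keys
        if central.get(key) != local.get(key)
    }

# Hypothetical exported settings; names and values are illustrative only.
central_store = {
    "session_timeout_minutes": 20,
    "auth_token_lifetime_minutes": 60,
    "load_balanced": True,
}
local_store = {
    "session_timeout_minutes": 20,
    "auth_token_lifetime_minutes": 20,
}

for key, (central_value, local_value) in diff_settings(central_store, local_store).items():
    print(f"{key}: central={central_value!r} local={local_value!r}")
```

Settings that exist on only one side are reported too, which matters here because the central servers sit behind a load balancer and the local ones do not.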
